AGI And Decoding Destiny

We are at an inflection point which few people fully comprehend

As a researcher and builder of AI systems, I have spent a lot of time investigating the ‘what and why’ of Artificial Intelligence. The 'what' is driven by how we define AI. I was fortunate enough to coordinate research with hundreds of leading AI developers, including a Nobel Prize winner, a Pulitzer Prize winner, and co-founders of the leading AI labs: in short, those actively building AI and AGI. Some of that research can be read here and here. Why is defining AI important, despite some researchers' lack of interest? Simply put, if you cannot define it, how can you build it or regulate it?

The What

Whilst not accepted by all labs, the clearest definition of AGI is now starkly presented in the mission of OpenAI:

“Artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work.”

This definition encapsulates both the aspiration and the challenge. AGI is not merely an engineering feat; it is a societal crucible. What does it mean to outperform "humans at most economically valuable work"? At its core, this question invites us to redefine not just intelligence, but value, autonomy, and purpose. AGI, therefore, is not a technological solution; it is a societal inflection point.

The material I’ve gathered from ongoing research outlines a timeline that is startlingly compressed. By the end of 2025, we may witness the arrival of multimodal, agentic, PhD-level AIs capable of executing complex workflows with near-instantaneous precision (80% probability). These will be systems that can manage complex processes, collate vast datasets, and implement procedures at speeds no human team could rival. Shortly thereafter, perhaps within two years (by the end of 2027 or 2028), humanoid robotics will enter the scene, bridging the divide between digital intelligence and physical reality.

This is not speculative fantasy; it is the trajectory of current development.

Yet, as the timelines accelerate, so too does the gravity of their implications. Within two years, mathematicians may find themselves eclipsed by machines that excel in symbolic reasoning. Software engineers, while temporarily elevated, will face a redefinition of their roles as AI systems ascend the ladder of abstraction to interface directly in natural language. For knowledge workers across the spectrum, the horizon is both thrilling and disquieting.

But what of the bottlenecks? Compute capacity is one, but it is not the sole arbiter. Algorithmic innovation, the fabled "secret sauce" of leading labs, competes with hardware advances in a race to redefine capability. AI memory capabilities are being upgraded at a breathtaking pace. The race among the leading AI research organizations is palpable and abundantly clear.

These labs work with an added layer of strange camaraderie. Researchers swap insights at conferences, in private gatherings, and on X, and many move from one lab to the next, even as their employers vie for supremacy. The bottleneck is adoption.

AGI is closer than the public realizes

The societal ramifications, however, are anything but ironic. As these technologies proliferate, governments will be compelled to step in, not always competently. Regulatory frameworks will lag behind innovation, and public opinion, often swayed by AI-driven media, risks becoming a liability rather than a safeguard. The world’s institutions must act with unprecedented foresight, a tall order in an era where short-termism reigns supreme.

As Will Bryk, CEO of Exa AI Lab, said:

There are very few people who:
a) understand how these systems work
b) are able to suppress the "this sounds too crazy" part of their brain
c) are able to suppress the "go full hype" part of their brain
d) are in SF so hear things on the ground beyond twitter/the news
e) actually care about helping the world and are not just trying to sell you something
f) recognize the gravity of the situation and treat it as such
g) are reasonably competent at converting thoughts into words

The Why

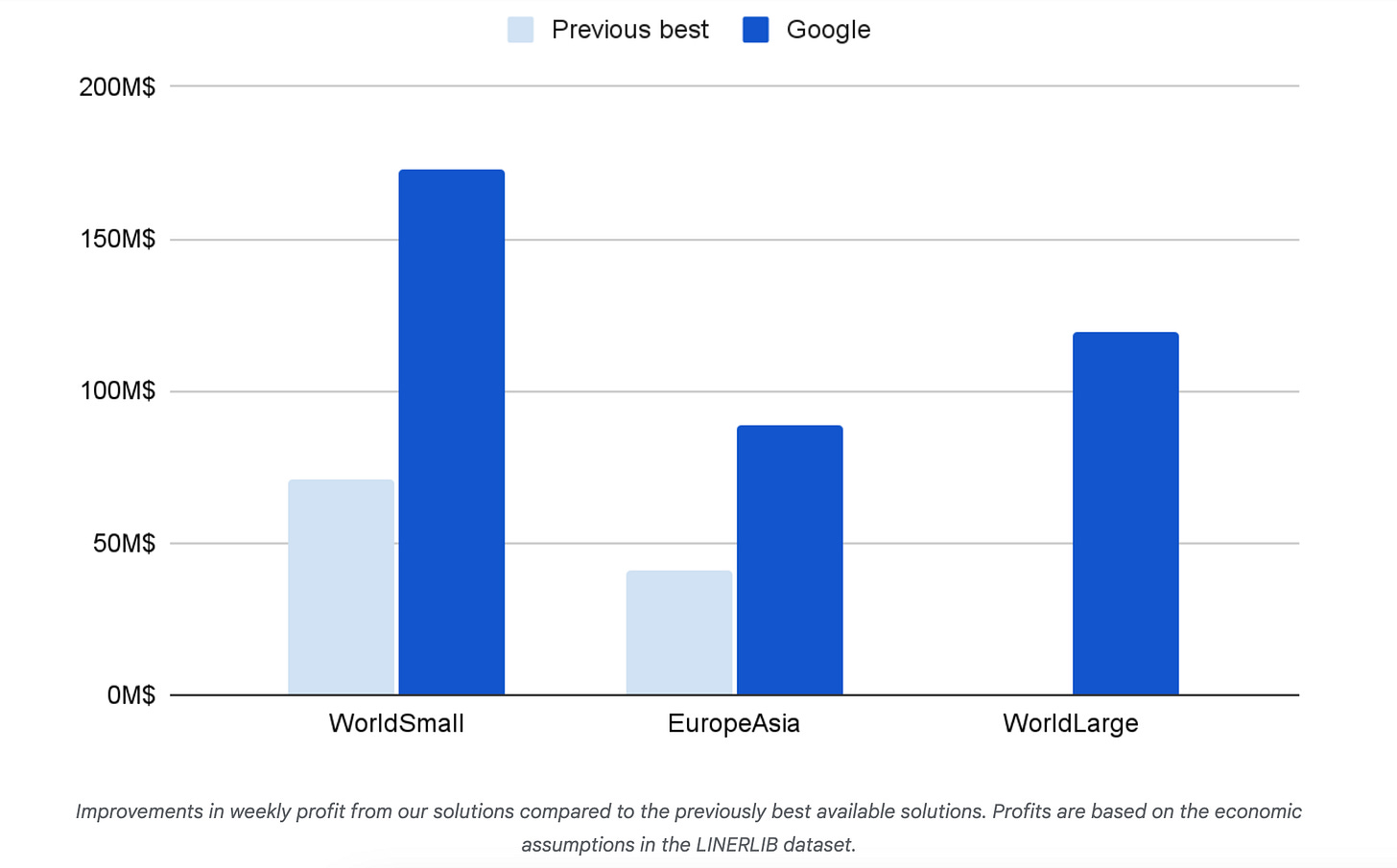

Whilst it may not be immediately apparent, AI implementation and its impact on employment are already underway. AGI offers transformative possibilities: a perfect tutor capable of providing personalized advice on most subjects, biology-enhancing drugs with zero side effects, super-clean energy solutions, and groundbreaking discoveries in fields like physics, chemistry, and astronomy.

AI’s real-world applications already offer glimpses of how profoundly these technologies can transform industries. DeepMind’s optimization algorithms, which leverage advanced weather pattern predictions and energy modeling, reduced the energy used to cool Google’s data centers by up to 40%. Mathematical heuristics used to streamline cargo shipping, optimizing routes for ocean currents, fuel consumption, and real-time weather conditions, deliver $150 million profit per ship, per week. The CEO of Goldman Sachs has described how drafting an S-1, the initial registration prospectus for an IPO, might once have taken a six-person team two weeks, yet can now be 95 per cent done by AI in minutes. A lawyer at a large US law firm wrote that legal drafts that once took five hours are now down to 45 minutes. The legendary screenwriter and film director Paul Schrader has said that ChatGPT is better than any script editor he has worked with and has better ideas in a fraction of the time. I could go on and on with examples.

Image: Google improvements in weekly container shipping, more than $150 million per week, per vessel.

Yet these successes, compelling as they are, show only the early “last mile” of AI implementation. It seems the public and corporations need to see more before they will act.

The real last mile is societal adoption

What happens when AGI is no longer just a tool but an autonomous entity navigating complex workflows? This shift demands more than technical readiness; it demands a reimagining of purpose. Is the end goal efficiency, equity, or something yet unnamed? For corporations, the answer is profits and dividends, which is precisely why these are no longer questions for engineers alone; they are societal imperatives.

The “last mile” bottlenecks of integration, be it in institutions, public services, or personal workflows, are not trivial. These include resistance to organizational change, the need for workforce retraining to adapt to new technologies, and ethical concerns such as algorithmic bias and transparency in AI decision-making. Take Google’s application of DeepMind’s technologies: the hurdles were not in creating functional algorithms but in convincing organizational hierarchies to trust and implement them. Similarly, AI-driven automation in shipping has faced resistance not from technological limits but from entrenched industry practices. These challenges are harbingers of a broader issue: the lag between capability and adoption.

Action

The custodianship of the future does not rest solely with technologists; it rests with all of us. To be active custodians of the future, we must participate in public discussions about AI policies, support organizations working on ethical AI initiatives, and educate ourselves and others about the implications of these technologies. By taking these steps, we can ensure a more informed and equitable path forward. To navigate the turbulent waters ahead, we must engage deeply with the ethical, philosophical, and practical dimensions of AGI, shifting our focus from individual success to collective progress. This transition offers an opportunity to redefine meaning in a rapidly evolving world, where contributing to a future that benefits all of humanity becomes a powerful source of purpose. I tell all of my students that this is not a time for passive spectatorship. It is a moment that demands action, reflection, and courage.

Determined Future

In the end, the future of AGI will not be determined by technology alone but by the collective choices we make. These choices will define not just what we build but who we are and who we become. AGI has the potential to redefine human purpose and identity in profound ways, good and bad. It could inspire new forms of creativity, enabling humans to collaborate with AI to create art, music, and literature that transcend traditional boundaries. Similarly, AGI might foster deeper social connections, as its capabilities could be harnessed to mediate and enrich human interactions. In an AI-augmented society, we might find meaning not only in personal achievements but in the collective progress made possible through this unprecedented partnership with intelligent systems. AGI is inevitable, and it is coming sooner than we think, but we still have time to adapt and to create a future worth inhabiting.

The ancient Greeks asked of any aspect of life, “Is it true? Is it beautiful? Is it good?” These timeless questions echo across the centuries to meet us in the age of AGI. They serve as an ethical compass for its development, urging us to assess not just its technical and economic achievements but its moral and existential implications and its alignment with human values. They remind us to ensure that AGI contributes to a world that upholds truth, fosters beauty in its creations, and advances the collective good.

We have the tools, the intellect, and the foresight. The question is whether we have the will to shape society for the collective good.

Stay curious

Colin

I am using AI extensively in multiple areas, including completing a Master's in Education. I also offer to teach it to every person I come in contact with in my daily life, just to get them started. Very few people comprehend what is happening. Even though they may use AI minimally in their work lives, they have yet to use it in their personal lives or to see the explosive impact it will have on our society. I recently attended an AI event where a CEO likened this pivotal AI moment to when Frederick Taylor's scientific management was applied to manufacturing and labour jobs. While that did bring workers some benefits, the gains went mostly to company owners rather than to the workers, who often ended up in soul-draining jobs as proverbial cogs in the machine. This is my concern with the advancement of AI if we do not engage in preventing that outcome.

Thank you, I appreciate the post!

One of the key things that comes to mind is that humans seem to be declining in relevance as labourers, as AI takes over some jobs in the near future. For society, I view this as either an opportunity to unleash human capacity or the end of it.

Currently, the main beneficiaries of this development, at least in the short term, are companies that can harness AI, just like your Google example. As company profits grow, so does income concentration.

While profit from value is good, there’s a case for a more balanced approach that brings about more comprehensive social support, from more services to the much-debated universal income. For instance, if artists could dedicate themselves 100% to their art without aiming at profit, this could lead to new understandings of the human experience. Similarly, people would be drawn to work that is meaningful to them, rather than to work that merely lets them “make a living”.

I am excited at the prospect of us, as a society, making the right choices: the ones that allow us to live in a more true, beautiful, and good world.

Thank you,