Overcoming The Threat of Intelligence Decline

IQ seems to have stagnated and even declined. Can AI help boost human intelligence?

First, a quick update: once or twice per week I will post an essay on AI and its impact, progress, jobs and human intelligence. The other two or three posts will generally focus on great scientists, human behavior, ideas on intelligence (and stupidity) and human progress. I'll aim to publish 4 or 5 posts per week instead of one per day.

Ironically, there is a rather good AI-generated audio conversation of this post below.

PART ONE

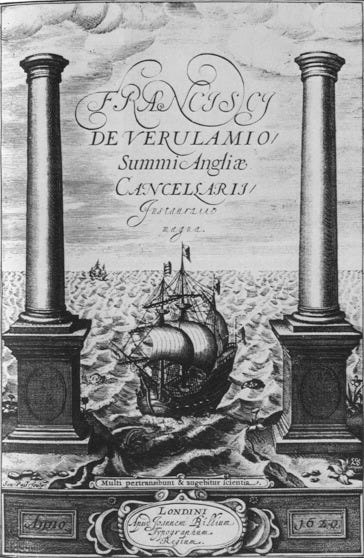

One of the most iconic images of the English Renaissance is the engraved title page of Francis Bacon's Instauratio Magna. It depicts the ship of learning sailing through the "Pillars of Hercules", the Straits of Gibraltar, once the symbolic boundary marking the edge of the known world. The ship, however, is not content to stay within limits; it returns from the vast open seas bearing the fruits of exploration, new ideas and fresh discoveries. Beneath this powerful image is a quotation from the Book of Daniel (12:4) in the Latin Vulgate: Multi pertransibunt et augebitur scientia ("Many shall run to and fro, and knowledge shall be increased"). Bacon made this quotation his own, weaving it into his grand vision of knowledge and learning.

Bacon's personal spin on this biblical passage is evident when he first references it in Valerius Terminus, in the chapter titled "Of the Limits and End of Knowledge." The "levels of knowledge" are numerous and noble, he says, and well within human grasp. His interpretation reflects his belief that there is still a wealth of understanding to be cultivated by humanity, and cultivating it was, for Bacon, a moral duty.

The Cycles of Intelligence Growth and Decline

Francis Bacon saw human history as one long, often repetitive cycle of waxing and waning intelligence. In his analysis, mankind's knowledge did not grow smoothly over time but moved through grand revolutions: golden ages in which the mind flourished, followed by dark, stagnant periods that erased much of that progress. The Greeks, the Romans, and then the Renaissance each had their time in the sun, but each was also followed by an era in which knowledge plateaued or even regressed. Think of the destruction of the Library of Alexandria and the purges of intellectuals.

Francis Bacon’s concern with the loss of knowledge throughout history is a cautionary tale. He saw the destruction of the Library of Alexandria, and other cases, as a profound setback for human intellectual progress, symbolizing the vulnerability of knowledge to time and turmoil. While the extent of the library's lost contents remains debated, Bacon’s insight holds undeniable weight. Societies can experience periods of intellectual decline. These declines often stem from a confluence of political instability, cultural upheaval, and deliberate suppression. A survey of history reveals striking examples where knowledge was not only forgotten but actively destroyed, often with lasting consequences.

The European Dark Ages (5th–10th Centuries)

Following the collapse of the Western Roman Empire, Europe entered a period of fragmentation and instability. The sophisticated infrastructure that had once supported intellectual life (libraries, schools, and networks of scholars) crumbled under the weight of political turmoil. Classical texts were preserved in isolated monasteries, yet access to this reservoir of knowledge was limited, slowing the pace of scientific and philosophical advancement. The contrast between the intellectual vibrancy of the Roman period and the relative stagnation of these centuries is a stark reminder of how societal instability can curtail progress.

The Burning of the House of Wisdom in Baghdad (1258)

The Mongol invasion of Baghdad brought an end to the House of Wisdom, a center of learning that had flourished during the Islamic Golden Age. This institution had been a bastion of intellectual achievement, housing manuscripts and fostering collaboration among scholars from diverse cultures. Its destruction marked a turning point, significantly diminishing the vibrancy of intellectual life in the Islamic world and underscoring how war can obliterate the institutions that sustain knowledge.

The Destruction of Mayan Codices (16th Century)

The Spanish conquest of the Maya civilization brought not only military domination but also cultural obliteration. Spanish clergy, deeming Mayan writings heretical, destroyed most of the codices that detailed the civilization's history, astronomy, mathematics, and other subjects. Only a few survived. The loss of these records has severely limited modern understanding of Mayan achievements, erasing much of the evidence of this advanced society's intellectual sophistication.

Conquest of the Intellectuals

Throughout history, intellectuals have often been targeted during periods of conquest, revolution, or authoritarian rule, as their knowledge and influence are perceived as threats to oppressive regimes. During the Second World War, Nazi forces systematically targeted intellectuals in occupied countries such as Poland, most infamously in the AB-Aktion campaign, where academics, teachers, and other cultural leaders were executed or sent to concentration camps.

Similarly, under the Khmer Rouge in Cambodia (1975–1979), Pol Pot's regime sought to eliminate perceived elites, leading to the mass execution of teachers, scientists, and anyone associated with education or intellectualism. During the Stalinist purges in the Soviet Union, scholars and scientists were frequently arrested, exiled, or executed as part of the regime's efforts to suppress dissent and consolidate power. Likewise, in modern-day Iran, a country that was once in the vanguard of education, intellectual and cultural life is now woefully suppressed. These examples illustrate how intellectuals, often the bearers of cultural and scientific progress, have repeatedly become targets during periods of ideological fervor, war and political instability.

The Cultural Revolution in China (1966–1976)

Mao Zedong's Cultural Revolution in China epitomizes the deliberate eradication of intellectual and cultural heritage. Universities were shuttered, intellectuals persecuted, and historical artifacts and texts destroyed in a movement to purge "old ideas." This decade-long disruption to intellectual life not only led to the loss of invaluable historical records but also stymied China's cultural and scientific progress for years, devastating its intellectual ecosystem.

While we do not currently face such sweeping purges, with possible exceptions such as ISIS's destruction of cultural heritage in Iraq and the ongoing war in Syria, there is nevertheless a recorded decline in measured intelligence in many countries. This is known as the 'Reverse Flynn Effect', a phenomenon in which average intelligence scores have shown signs of decline in certain populations over recent decades.

Decline in Intelligence?

Unlike the previous declines in history, current possible causes of the Reverse Flynn Effect include environmental factors, such as changes in education quality, nutrition, and an increasing reliance on technology, which may reduce the need for certain types of cognitive engagement. AI, in particular, might further contribute to this decline by automating tasks that previously required mental effort, leading to a reduced need for problem-solving, critical thinking, and memory skills. As AI takes over more everyday decision-making and cognitive tasks, there is a risk that these essential cognitive skills could atrophy, leaving us less capable of independent thought and creative problem-solving.

In a meta-analysis examining IQ scores across 31 countries from 1909 to 2013, researchers found that the size of the IQ gains observed for newer cohorts has declined. Other studies found that Finnish IQ scores fell by 2.0 points (0.13 SD) from 1997 to 2009, while French IQ scores fell by 3.8 points (0.25 SD) from 1999 to 2009. Similar declines have been observed in the UK and the United States. It remains an open question whether the observed stagnation and decline in ability scores are due to the emergence of widespread access to digital devices.
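The standard-deviation figures can be checked with simple arithmetic. Assuming the conventional IQ scaling of a mean of 100 and a standard deviation of 15 points (an assumption on my part; individual tests may be normed slightly differently), a change in points converts to a change in standard deviations as follows:

$$
d = \frac{\Delta \mathrm{IQ}}{\sigma_{\mathrm{IQ}}}, \qquad
d_{\text{Finland}} = \frac{-2.0}{15} \approx -0.13, \qquad
d_{\text{France}} = \frac{-3.8}{15} \approx -0.25
$$

In other words, the French decline is roughly a quarter of a standard deviation in about a decade, which is why these figures attracted attention.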

This decline raises significant questions about the future trajectory of human intelligence. Is this another turning point in intelligence similar to those Bacon observed, where periods of flourishing are followed by regression? And as we struggle to find a way to reverse this decline, will AI help augment intelligence for all, freeing up human cognitive resources for more creative and complex tasks, or will it accelerate the decline further by fostering over-reliance and cognitive stagnation? I certainly know many senior executives on my postgraduate AI programs who admit to ‘becoming lazy and over-relying on the AI’ for cognitive work, failing even to check the output carefully.

Bacon's own mission was to break the cycle of intellectual rise and decline; he didn't want just another spin on the intellectual merry-go-round. He wanted to propel human knowledge forward and to defy previous examples of cognitive stagnation. He wanted to replace the cycle of ups and downs with a steady ascent, a perpetual Instauratio Magna, a Great Renewal. And how did he plan to do it? Through a peculiar mix of intellectual modesty and bold ambition.

Our Thinking

Take, for instance, his famed Idols of the Mind. Bacon categorized human errors in perception into four main types: the "Idols of the Tribe" that plague all humans, the "Idols of the Cave" that are individual biases, the "Idols of the Marketplace" rooted in misunderstandings of language, and the "Idols of the Theatre", the dogmas of established philosophy. These "Idols" were the false gods, the deceptive guides that led humanity astray and ensured we kept circling back to ignorance. The first step in Bacon's revolution, then, was to clear out these idols, to face the illusions our minds conjure and, finally, to see nature with unclouded eyes.

Bacon's idols seem strangely at home in our present-day quandary with Artificial Intelligence. Could the systems we build fall prey to the very errors in thinking and biases they were designed to transcend? Or is it possible that, through confronting these idols, we might find a roadmap for humanity and AI that is not only intelligent but truly wise?

In Bacon's world, four idols imprisoned the human intellect:

The Idols of the Tribe, biases rooted in human nature that stem from our shared species-wide inclinations, our tendency to see patterns where none exist, to mistake coincidence for causality.

The Idols of the Cave, biases formed from individual experience, the distorting lenses of upbringing, education, and temperament.

The Idols of the Marketplace, deriving from the deceptive power of words, highlighting how language carries assumptions and baggage.

The Idols of the Theatre, which represent the grand dogmas, the sweeping stories we tell ourselves and each other.

Each of these idols represented a different sort of cognitive illusion, each a trap into which human reasoning invariably falls. And it is in these idols that we may find our way to a new understanding of AI, not as an entity immune from human error, but as one enmeshed with our mental shortcomings and biases, yet yearning to overcome them.

Bacon's Idols and AI

Idols of the Tribe. The most advanced neural network today is trained on human data, and therein lies both its promise and its peril. Our datasets are riddled with ghosts of our tribal instincts, tendencies to misinterpret, to exaggerate the familiar, and ignore the foreign. When we train AI on such data, we risk amplifying these biases, making AI faster and more scalable at spreading our flaws. The desire to classify and categorize becomes, in AI, an unchecked compulsion to reduce complexity into simple dichotomies. Are we making AI truly objective, or are we just making our biases more powerful?

Then there are the Idols of the Cave. Machine learning models, like GPT or facial recognition systems, are trained on highly specific datasets, often reflecting the partial truths of their creators. These training datasets become the shadows on the wall, shaping how AI perceives the world. Bacon tells us to challenge our own mental caverns; should we not do the same for AI? Techniques such as incorporating diverse datasets, adversarial testing, and self-diagnostic algorithms could help AI identify and adjust for these inherent limitations, creating a more reflective and resilient system. For example, Google's multilingual BERT model was trained on text in over 100 languages, exposing it to a wider range of cultural contexts, an approach often proposed as one way to reduce bias in language models. Similarly, systematic auditing played a crucial role in revealing weaknesses in facial recognition systems, such as the accuracy gaps across gender and skin type identified by Joy Buolamwini in the Gender Shades project, which prompted vendors to improve their training data and models.

The Idols of the Marketplace derive from the deceptive power of language. Today, we are all too aware of how AI models learn the prejudices inherent in human words. Language, as Bacon knew, carries assumptions that shape meaning and identity. The biases an AI model absorbs are not flaws of the algorithm but reflections of a world where words often deform reality. To free AI from these idols, we must teach it to see language as layered, contradictory, and messy, much like humanity itself. Recent advances in natural language processing (NLP), such as OpenAI's GPT-4, illustrate how models can be trained to manage ambiguity effectively. By using contextual embeddings and attention mechanisms, these models learn to interpret nuanced meanings, handle contradictions, and provide more context-aware responses, highlighting the complexity inherent in human communication.

Lastly, the Idols of the Theatre represent the grand narratives we create about AI. This also brings us to the broader topic of AI safety: ensuring that as we develop these systems, we also implement safeguards to prevent unintended consequences. Organizations like OpenAI and the Partnership on AI have been advocating for transparency, accountability, and ethical guidelines to make AI development safer for humanity. The myth of AI as an omnipotent oracle, a flawless machine capable of replacing human decision-making, is itself an Idol of the Theatre. AI is not a god-like intelligence but an emergent phenomenon of many parts: statistical, fragmented, and fallible. This is how the neural network pioneer Professor Terry Sejnowski described it:

“Something is beginning to happen that was not expected even a few years ago. A threshold was reached, as if a space alien suddenly appeared that could communicate with us in an eerily human way. Only one thing is clear – LLMs are not human. But they are superhuman in their ability to extract information from the world’s database of text. Some aspects of their behavior appear to be intelligent, but if it’s not human intelligence, what is the nature of their intelligence?

In an effort to explain LLMs, critics often dismiss them by saying they are parroting excerpts from the vast database that was used to train them. This is a common misconception”.

This may be true, but as the Idol of the Theatre shows, we tend to believe that AI must know better than we do. In truth, it is as susceptible to errors of the intellect and to bias as its creators.

Scientific Reasoning

Bacon's scientific method was more than just collecting observations. It was a system, almost bureaucratic in its rigor. It was not naive empiricism, the sort where you hoard observations and hope meaning will magically emerge; it was systematic and methodical. Bacon believed we could train the human intellect to think like a disciplined surgeon or architect: relentless, always revising, cross-referencing, and making sense of observations. AlphaFold, an AI system developed by DeepMind, echoes this iterative refinement: it learns from vast datasets of known protein structures and repeatedly refines its predictions of new ones. Its success shows how combining cross-referencing and iteration can lead to breakthroughs in understanding complex biological phenomena.

Today’s AI

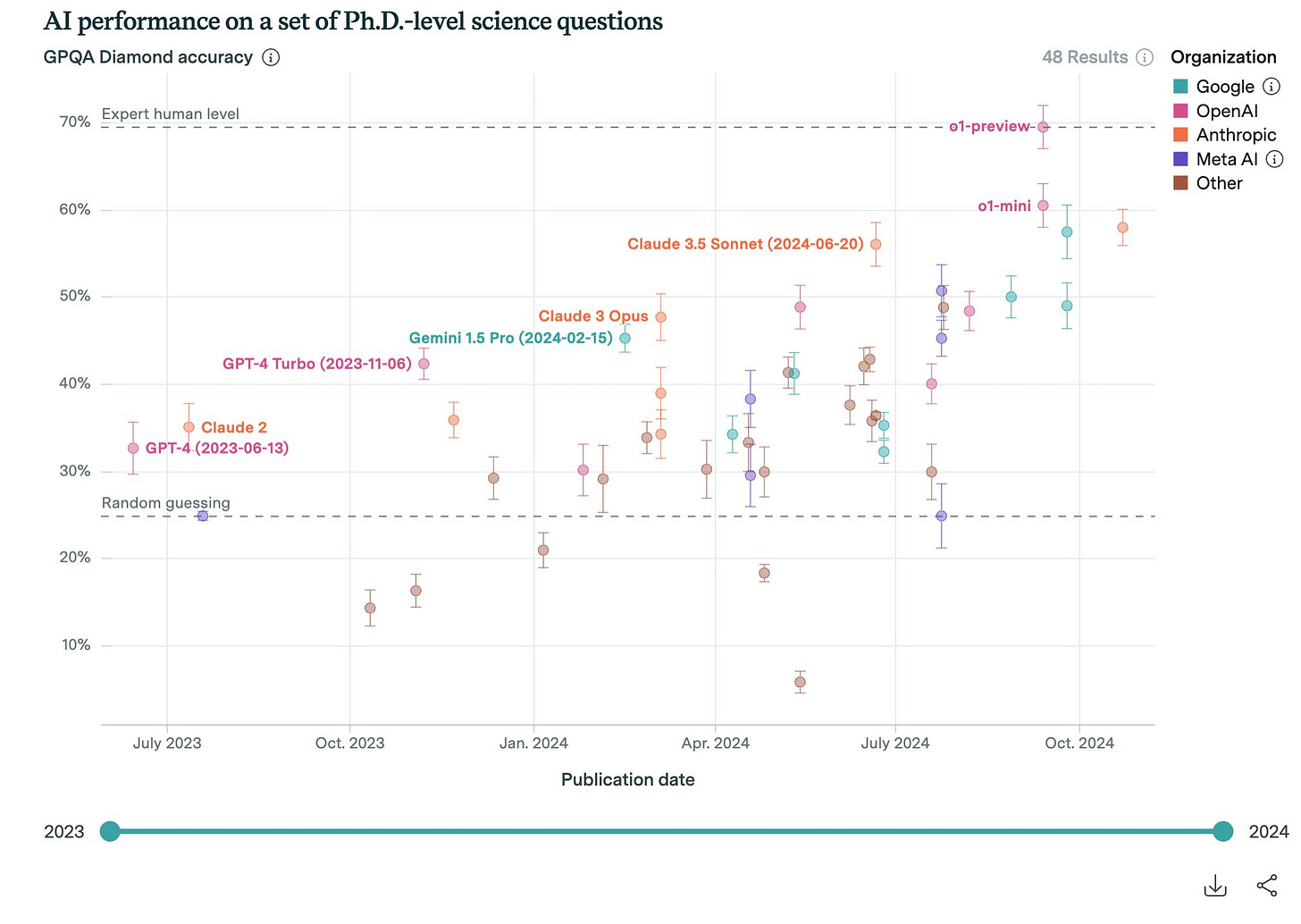

The more I use current models and see their output, and given their rapid rate of improvement, which will continue, the more I believe they are already "smart enough" and are rapidly becoming a collective brain.

Image credit below. This is striking because of the rapid progress, and because these results reflect PhD-level abilities across many fields, not in a single specialty.

First, these models are sufficiently intelligent to significantly augment and empower cognitive work. They don't handle every task for you, and that's by design, not a flaw. With current large models, you feel in control and capable of keeping up. They do, however, need further development to reduce hallucinations, along with better tooling and improved reliability and trustworthiness. Specifically, they require calibration to understand their limitations: what they can and cannot do, and what they know versus what they don't, so that they do not confabulate and they stay on task. Yet they don't need to become vastly smarter to be transformatively useful. Their current capabilities, paired with refinement, are enough to make them powerful tools.

Second, if these models were much smarter, they would likely cause significant disruption. At that point, most people would struggle to keep up. Instead of empowering us, they would displace us in the workplace; rather than augmenting human capabilities, they would replace them. It's beneficial that today's models require us to review their code or analysis for subtle flaws, because that process ensures our engagement. If, however, they automated 75–90% of tasks across most jobs, human workers would become superfluous. Such a shift wouldn't be an economic boon but a collapse of our economic and social systems, and it would probably lead to widespread cognitive decline.

Note, for instance, the recent study by Aidan Toner-Rodgers at MIT, which showed that while AI delivered significant gains in research discovery and productivity, 82% of the scientists using it felt less challenged and reported lower job satisfaction ‘due to decreased creativity and skill underutilization’.

As one scientist noted in Aidan’s study:

“While I was impressed by the performance of the [AI tool]...I couldn’t help feeling that much of my education is now worthless. This is not what I was trained to do.”

Moreover, smarter models exacerbate alignment and control problems. We are already seeing glimpses of these with OpenAI's o1 and similar models. As safety analyses have highlighted, there's no reason to believe these issues will magically disappear, nor is there evidence that researchers know how to eliminate them.

This calls for a substantial shift in priorities. While some AI labs remain fixated on developing AGI or super-intelligence, others aim to deliver more useful and reliable tools. We should encourage the latter approach. Collaborating with reliable, current AI tools can be deeply enjoyable and productive (with the caveat mentioned above on more powerful models). It’s crucial that we focus on this kind of augmentation, one that enhances human agency rather than replacing it.

This raises the question: where should we be focusing? My belief is that we should be building AI or AGI that helps cure disease, accelerates scientific progress, and solves the world's major problems, not an AI that takes away human thinking and everyday cognitive tasks. The What and Why of AI need to be rethought!

Human Augmentation

There is ‘a recurring pattern of domain experts underestimating the capabilities of AI in their respective fields’. Nevertheless, I believe the answer is not to remove humans from work, judgment and decision-making, but to build systems that challenge us to be better and make us aware of our own limitations (and the AI's). Bacon's idols were not meant to paralyze humanity with their inevitability but to empower us through awareness. AI can serve a similar function, not as a replacement for human intelligence but as an augmenter.

Cognitive Boost

So, what does Bacon's vision offer us today? We live in a world where information multiplies with dizzying speed. Yet, the guiding principle Bacon gave us remains relevant. Human knowledge must progress. The cycle of intellectual decay can only be broken if we acknowledge the idols that cloud our minds, if we treat discovery not as an individual pursuit of genius but as a shared, disciplined, and cooperative human endeavor. In Bacon's view, true knowledge was never a solitary enterprise, it was the work of collective human effort, built from countless contributions, each cell adding strength, each hand guiding us towards shared discovery.

PART TWO

AI vs Human Intelligence

With technology galloping ahead, pushing into every niche of our world, we must ask a fundamental question. Is artificial intelligence pushing humanity forward, or is it creating a new monster, one that quietly but effectively stifles the art of thinking? Is there a new cycle of intellectual decline? Are we creating a future in which critical thinking and the human touch in written communication become wistful relics? I believe this is a high-stakes standoff that demands our scrutiny.

It seems almost paradoxical that artificial intelligence, such a human-developed marvel, could ultimately trample over human intelligence and the unique craft of creativity and human thought. The problem, in part, lies in how we see the essence of thinking. Words are more than mechanical arrangements; they are conduits, rich with emotion, meaning, and human intent. Thinking, speaking and writing demand more than the ability to string together clever sentences; they demand critical thinking, an art that AI tools, and indeed many humans, have yet to master in a genuine way.

Imagine a young student, head full of half-formed ideas, asked to write a story to suit her professor's predilections. Faced with the option to use an AI tool, this student could easily sidestep the effort, letting AI do the heavy lifting that was once the product of imaginative labor. The result is often homogenized prose, stripped of the distinctive quirks and idiosyncrasies that characterize individual expression, leaving behind writing that sounds as if it could have come from anyone, anywhere. The student's voice, unique and vibrant, becomes a pale imitation. Algorithms turn writing into a mechanical process: accurate, yes, but devoid of the pathos that defines human creativity. Such output may be vivid but ultimately lacks the warmth and personality of an author's touch. Is that the future we want? A journey into sterile efficiency, lacking the vibrancy of authentic human connection?

Critical thinking asks us to look beyond the surface, to scrutinize, evaluate, and examine details without falling into empty, facile conclusions. AI, despite its undeniable capabilities, remains inherently mechanical, precise, unerring, but often lacking the depth of nuance that human experience provides. However, as AI continues to evolve, it may develop a semblance of nuance, perhaps by better interpreting context or learning to mimic complex emotional cues. This raises intriguing questions about how AI's role in writing might shift in the near future, from merely a tool to get started to a collaborator capable of contributing deeper, more insightful reflections. The current systems might give us a clever phrase or an apt retort, but their limitations are apparent. The ability to weigh different perspectives, to consider contradictory evidence, or to discern between genuine insights and shallow imitations: these are uniquely human qualities.

There's no shame in progress, but there is shame in surrender: in becoming complacent and allowing AI to do our thinking for us. After all, these systems are designed to be ‘thinking machines’. What's at stake is more than the immediate product of prose or verse. At stake is our ability to think deeply, to feel passionately and to navigate the complexities that define us as human beings. AI can replicate, elucidate, and approximate profundity, but it lacks the uniquely human qualities, such as moral depth and reflective insight, that make thought truly transformative. These limitations currently prevent large language models from achieving genuine creative breakthroughs, which would seem to require empathy, intuition, and a deeply personal engagement with the world.

Who Owns Your Thoughts?

Will we let AI become an overlord dictating our thoughts? We must not. AI is a tool, built and trained to help us carve meaning out of chaos. AI should not replace the pencil, but help to sharpen it. Of course, rejecting AI outright, being fearful and resistant, is not the right choice either; that could equally hinder progress. Instead, let's position AI as a system that is useful, but never at the cost of our own intellectual autonomy.

The goal is to confront AI's advance with clarity of thought, and to cultivate in ourselves and future generations the ability to be critical thinkers and writers. For instance, we must not allow AI to reduce the thoughtful craft of writing into something merely efficient. Writing, in its essence, is more than the act of putting words on a page; it is a reflection of life. AI might help gather ideas, but only human effort should turn those ideas into something meaningful.

So, what is AI’s role? Is it the harbinger of an inevitable decline, or is it a helpful system for amplifying our creativity? We must not abdicate our responsibility as the primary architects of language. Let AI handle the mundane aspects, the grammar (even then we give up much thought), the technical checks, while we focus on what truly matters, to think, to reflect, to create something lasting. To think critically and not be swallowed up by something that cannot grasp the complexities of ambiguity.

We must be both wise and bold, facing the challenges AI presents with vigilance and an enduring love of writing, language, thought, and intellectual pursuits.

Let us resist the temptation of laziness and instead embrace the challenge posed by AI with imagination and purpose, proving that human thought, enriched by emotion and insight, remains far from obsolete. We should develop these tools not as destroyers of human intellect but as enhancers of it, advancing scientific discovery and promoting human well-being.

Stay curious

Colin

Consider subscribing or Gifting me a Pot of Tea

So, with a hint of irony, below is a NotebookLM-generated AI audio conversation based on the above text. It is actually rather good: let the human do the thinking and the AI amplify it?

Image credit – Francis Bacon Novum Organum

PhD Level analysis by Epoch AI

Human Intelligence Decline – Reverse Flynn Effect
