Code Dependent

On the Frontlines of AI’s Invisible Empire

In an era where the rhetoric of innovation is indistinguishable from statecraft, Code Dependent does not so much warn as excavate. Madhumita Murgia has not written a treatise. She has offered evidence: damning, intimate, unignorable. Her subject is not artificial intelligence, but the human labor that props up its illusion: not the circuits, but the sweat.

Reading her work is like entering a collapsed mine: you feel the pressure, the depth, the lives sealed inside. She follows the human residue left on AI’s foundations, from the boardrooms of California where euphemism is strategy, to the informal settlements of Nairobi and the fractured tenements of Sofia. What emerges is not novelty, but repetition: another economy running on extraction, another generation gaslit into thinking the algorithm is neutral. AI, she suggests, is simply capitalism’s latest disguise. And its real architects, the data annotators, the moderators, the ‘human-in-the-loop’, remain beneath the surface, unthanked and profoundly necessary.

The subtitle might well have been The Human Infrastructure of Intelligence. The first revelation is that there is no such thing as a purely artificial intelligence. The systems we naively describe as autonomous are, in fact, propped up by an army of precarious, low-wage workers: annotators, moderators, cleaners of the digital gutters. Hiba in Bulgaria. Ian in Kibera. Ala, the beekeeper turned dataset technician. Their hands touch the data that touches our lives. They are not standing at the edge of technological history; they are kneeling beneath it, holding it up. Many of these annotators are casually employed as gig workers by Scale AI, a company valued at US$15 billion.

Moral Architecture

Murgia’s brilliance lies in her method. She does not present AI as a question of technical capacity. She frames it, more accurately, as a question of moral architecture. What do we owe the people whose labor trains our machines, but whose names never appear in our press releases? Who gets to define what counts as intelligence, or contribution, or harm?

To tell this story, she does not rely on abstractions. She gives us doors. And we walk through them into one-bedroom homes in Nairobi where a television flickers with Netflix while a laptop tags bounding boxes around lampposts in driverless car footage. We sit across from a woman labeling oil impurities on her kitchen table in Sofia, steadying her family’s finances while building algorithms that will one day replace her. These are not stories about the future. They are dispatches from the present we choose not to see.

Murgia rightly draws on Zuboff’s “surveillance capitalism” and Couldry and Mejias’ “data colonialism” as explanatory tools. She makes vivid what those terms often obscure: the experience of being surveilled without knowing it, of contributing without consenting, of laboring for systems that will never labor for you. And crucially, she maps how power is not merely abstracted by AI but redistributed, away from people and toward protocol.

Collapse of Agency

One of the most haunting insights in Code Dependent is the collapse of agency. Albert Bandura once argued that human agency is exercised individually, by proxy, and collectively. But Murgia shows how, in an algorithmically governed society, these forms of agency are being steadily undermined. The individual cannot appeal a black-box decision. The proxy, be it doctor, teacher, or caseworker, is beholden to the output of the system. And the collective, fractured by the design of platform economies, struggles to even recognize itself.

Yet there are embers of resistance. Before broadening the scope, Murgia roots her narrative in labor: annotators like Hiba, data technicians like Ala, precarious workers like Ian, whose invisible hands prop up systems that proclaim autonomy. But her lens widens. She finds resistance not in uprisings or policy memos, but in defiance, awkward solidarities, and the quiet insistence on dignity. Workers like Hiba find ways to assert personhood, even when stripped of power. Lawyers like Mercy Mutemi translate tech policy into labor justice. Poets like Helen Mort fight to reclaim their own likeness. Families like Diana Sardjoe's confront the algorithmic branding of welfare fraud. Murgia shows us that the terrain of AI harm is vast, reaching into health disparities (Ashita Singh, Ziad Obermeyer), police surveillance, and even the theft of personal identity through deepfakes. This is not just labor. It is life. Researchers from outside the Anglo-American epicenter, Birhane, Ricaurte, Miceli, build the epistemic scaffolding that Silicon Valley refused to.

To say that AI systems replicate bias is now a truism. What Murgia shows is that these systems do not simply reflect injustice; they reroute it, digitize it, and distribute it at scale. A facial recognition system doesn’t just fail to recognize Black women; it inherits centuries of economic exclusion and encodes it into a job denial. A language model trained on human creativity does not merely mimic style; it cannibalizes cultural labor and redistributes it to shareholders.

There is a passage that haunts my mind: workers annotating horror, violence, dismemberment, child exploitation, to train content filters for social media, themselves traumatized and discarded. What do we call a system where the pain of the many is processed so the comfort of the few may remain undisturbed?

And this is why Murgia’s book matters. Not because it indicts AI, but because it indicts us. Our indifference. Our inattention. Our willingness to accept progress as something bought, not built. In Code Dependent, we are asked not just to reckon with a future of machines, but with the present of our own complicity.

If there is hope here, and I believe there is, it lies not in better algorithms, but in clearer sight. In the courage to ask, as Murgia does: what kind of intelligence are we training? And for whom?

That interdependence, our mutual entanglement with the systems we build and the people erased by them, is Murgia’s quiet thesis. We are, all of us, codependent. Not just with the systems we build, but with the people we’ve forgotten we depend on. The question is no longer whether AI can think. It’s whether we still can.

Unanswered Questions

Murgia ends not with resolution but with a provocation. She poses the questions we’ve been too seduced or too distracted to ask: What is the limit of human labor that can be made invisible? How much personal sovereignty are we prepared to surrender to systems we cannot interrogate? Who speaks for the algorithmically misclassified, the wrongly denied, the digitally excluded? Can we govern systems that are designed to elude understanding? Will we treat AI as a tool or accept it, quietly, as an authority?

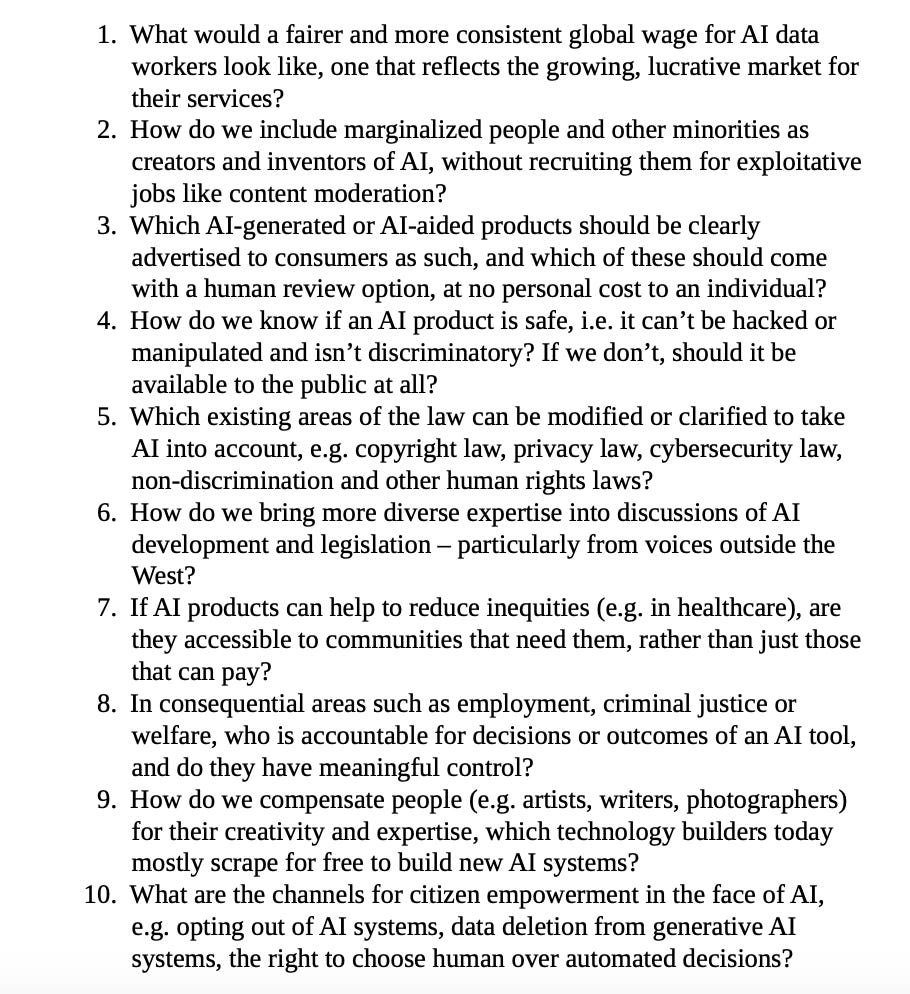

And perhaps most tellingly: How do we regain our footing, not as users or data points, but as citizens, when the terrain itself is being shaped by opaque systems built for profit? These are not rhetorical flourishes; they are demands. And they do not permit neutrality. Yet Murgia does not end with despair. She gestures, cautiously, toward beginnings: Father Paolo Benanti’s Rome Call for AI Ethics, local acts of resistance, and a ten-point checklist for critical engagement. These are not prescriptions but provocations, frameworks for reclaiming our role, not as spectators, but as agents.

Overall, I enjoyed the book. It is written in an engaging, story-driven style and can be read in 8 hours. She offers no utopia, only the insistence that another future is possible if we choose to act like citizens, not addicted ‘users’.

In the end, Code Dependent is not a book about machines. It is a book about us, what we reward, what we tolerate, and who we’re willing to forget in the name of convenience. Murgia reminds us that if we are living in the shadow of AI, it is because we have not yet looked directly at the people building it, and the structures that keep them there.

Stay curious

Colin

Thank you for this excellent introduction to and review of 'Code Dependent'! I've downloaded the book and started to read... you're right. This is not about AI, this is a book about us!!

In these AI conversations, I've long since had the inkling that all this next level of 'technology is progress' is a continuation of the familiar power distribution ~ colonialism, capitalism, racism...

Madhumita Murgia reveals her unique insights, and she writes about it so well, it's a joy to read.

I love that she sees both sides so clearly:

“... while people are feeling robbed of their individual ability to direct their own actions and attention, AI systems have led, unexpectedly, to a strengthening of collective agency. Ironically, the intrinsic qualities of automated systems – their opaqueness, inflexibility, constantly changing and unregulated nature – are galvanising people to band together and fight back, to reclaim their humanity.”

Truly, BIG THANK YOU, Colin 💙 🙏 ✨

"...where euphemism is strategy..."

Just like current White House policy.

//

"AI, she suggests, is simply capitalism’s latest disguise".

Indeed it is! And not just in the U.S. If Xi Jinping thinks he can control it, he's in for a rude surprise.

//

"...propped up by an army of precarious, low-wage workers..."

The very reason I gave up on software development.

//

"Researchers from outside the Anglo-American epicenter, Birhane, Ricaurte, Miceli, build the epistemic scaffolding that Silicon Valley refused to. To say that AI systems replicate bias is now a truism. What Murgia shows is that these systems do not simply reflect injustice; they reroute it, digitize it, and distribute it at scale"

It seems to me, upon reading this, that current AI systems reflect the MAGA cult mentality.

//

"What do we call a system where the pain of the many is processed so the comfort of the few may remain undisturbed?"

Laissez faire Capitalism and Techno-Feudalism.

//

"...if we are living in the shadow of AI, it is because we have not yet looked directly at the people building it, and the structures that keep them there".

Much of which is the result of deliberate misdirection by the billionaire purveyors.