Russia’s Social Design Agency “utilise an effective combination of whole-of-society propaganda, fear, surveillance, and threats rooted in technological advancements, to shape an almost inescapably toxic information environment.”

Part 5 of a series of essays on Cognitive Warfare (Parts 1, 2, 3 and 4 are here, here, here and here)

What if the most effective weapons of the 21st century aren't fired, but forwarded? News articles, memes, videos, tweets, TikToks and a whole host of other content. If the 20th century taught states how to conquer territory, the 21st has taught them how to conquer perception. And few have learned that lesson as ruthlessly or as profitably as the Kremlin's contractors in the post-RT era.

The latest report by Pamment and Tsurtsumia, of the Psychological Defence Research Institute, Beyond Operation Doppelgänger: A Capability Assessment of the Social Design Agency, drawing from over 3,000 leaked internal documents of the aptly named Social Design Agency (SDA), is not merely an exposé of disinformation. It's a blueprint of how modern cognitive warfare is less about lying and more about laundering truth into irrelevance.

The central thesis of the report is devastatingly simple: the Doppelgänger campaign, once regarded as a singular Russian disinformation operation, was in fact just one tactic among many in a sprawling, industrialized propaganda apparatus. What Meta and EU institutions mistook for a stand-alone operation was, in reality, a business line within a broader influence factory, a Kremlin-funded network of digital mercenaries whose ultimate currency is attention. Not truth. Not ideology. Not even persuasion. Just attention.

The notorious 'Doppelgänger' campaign, with its cloned media websites of outlets like Bild and The Guardian, was not an isolated operation but a single, headline-grabbing tactic within the SDA's broader ‘Comprehensive Counter-Campaign against Europe’. Its goal was less to deceive and more to be discovered, thereby validating its own relevance to Kremlin funders.

The SDA, it turns out, does not care what you believe. It only cares that you engage. Whether you are enraged or fact-checking or quoting in parliament, you are part of the machine. “Being discovered is success,” Pamment and Tsurtsumia write.

“Being talked about is success. Being discovered is success. Being factchecked, debunked, denounced, is success. Being repeated by politicians, celebrities, and credible news sources is success.”

This is not information war as persuasion. It is cognitive war as market penetration.

And yet to frame this as uniquely Russian is to let ourselves off the hook. Because what the report also shows is that Western responses (counter-FIMI exposés, media coverage, and even academic research) have often become unwitting accomplices.

FIMI stands for “Foreign Information Manipulation and Interference.” It is a term increasingly used by European institutions and security agencies to describe coordinated efforts, typically by hostile state actors like Russia or China, to distort information environments abroad for political, strategic, or ideological gain.

Unlike traditional propaganda, FIMI isn't necessarily about making you believe something false. It's often about:

- flooding the information space to overwhelm critical thought,
- seeding confusion and distrust toward institutions,
- amplifying existing societal divisions,
- and eroding the shared foundations of truth that make democratic discourse possible.

Monetization of Your Attention

SDA's great innovation was to invert visibility into validation. The more they were condemned, the more Kremlin rubles flowed into their coffers. A perverse business model was born: propaganda as performance art, fact-checking as monetization strategy. Truth became just another ad format. The goal is “manufacturing consensus.”

The SDA's internal reports confirm this cynical calculus: the agency actively used mentions in Western media and the work of fact-checking organizations as proof of the campaign's reach and success to secure further funding. The irony is that the counter-FIMI community (Western governments, researchers, and NGOs doing investigative work), acting in good faith, became a line item in the SDA's budget justification.

Memes that Travel

One of the most unsettling insights from the report is that the SDA treats narratives the way marketing agencies treat slogans: disposable, A/B tested, optimized for reach. The question is not “Is it true?” or even “Is it persuasive?” but rather: “Does it travel?” If a meme about Zelenskyy being a cocaine addict outperforms a story about NATO encirclement, then guess what gets scaled? This is cognitive warfare without conviction, a click economy of disruption.

This ‘click economy of disruption’ is perfectly illustrated by operations like ‘Storm-1516’, a wake-up call for Europe’s cognitive defense, which used a sophisticated chain of distribution to launder its narratives. Fabricated content, from deepfake videos of politicians to staged interviews with amateur actors, would first be published via burner accounts or paid media in countries like Nigeria or Egypt before being amplified by a network of pro-Russian influencers to target European and American audiences. The process was less about believability and more about creating an untraceable, chaotic information flow.

The SDA's technical infrastructure rivals a mid-tier Silicon Valley firm: automated analytics dashboards, targeted ad buys, sentiment analysis, and tiered delivery mechanisms spanning fake influencers, cloned news websites, AI-generated video content, and bot-amplified Telegram channels. But what makes it effective is not the tools; it is the strategic clarity. Western institutions treat disinformation as a deviation from the norm. The Kremlin treats it as the operating system.

Another new report from the Atlantic Council’s Digital Forensic Research Lab (DFRLab) reveals this dispatch from the front lines of that system: “Putin is a leader of the sort which there is no more on Earth. He brought peace. Life has become better.” This wasn’t a real person speaking. It was one of thousands of automated Telegram comments designed not to provoke, but to permeate the digital spaces of occupied Ukrainian cities.

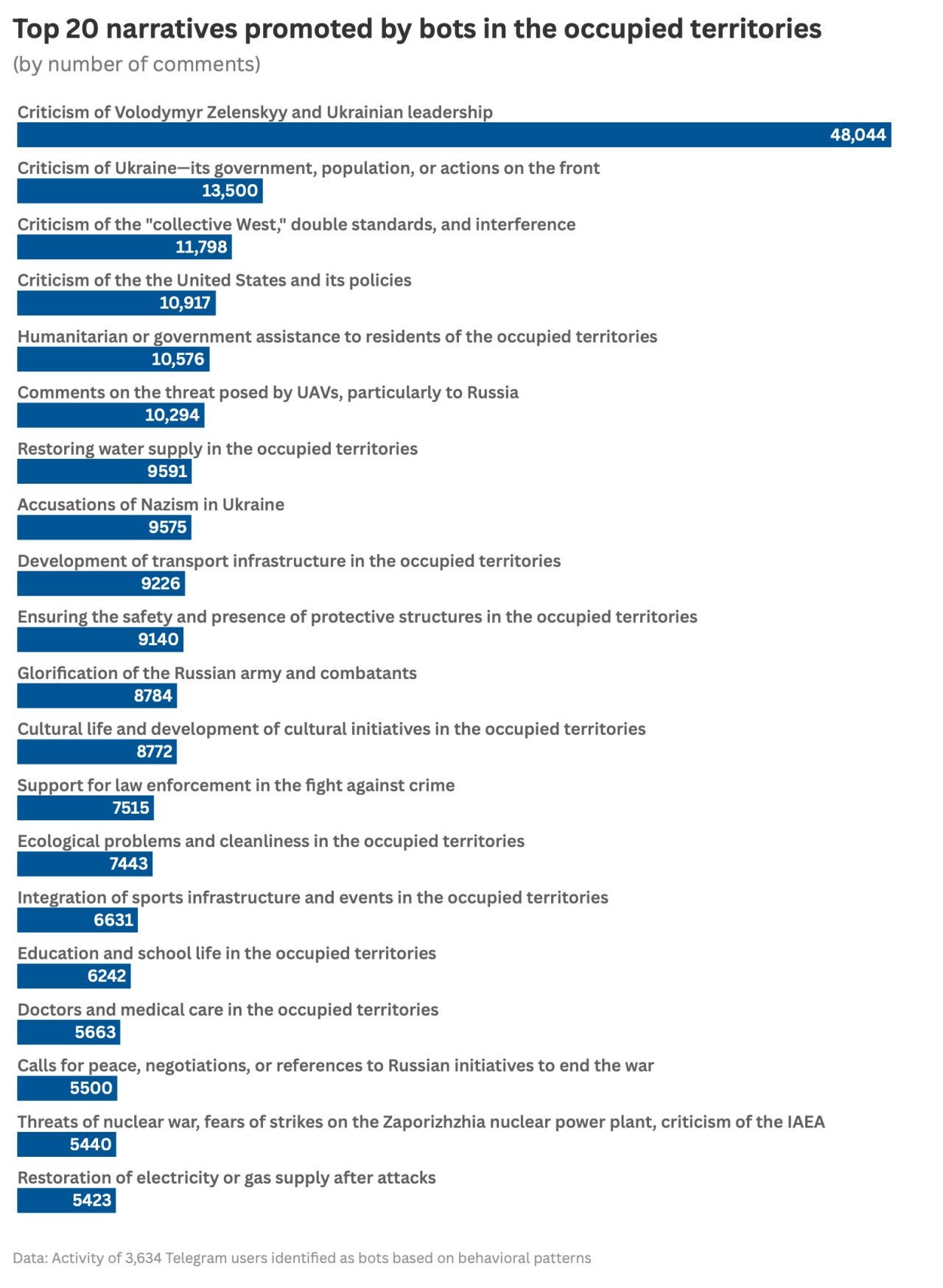

A recent investigation by DFRLab uncovered a network of over 3,600 bots responsible for more than 316,000 comments, using large language models to make state propaganda sound like a neighbor’s opinion. The strategy is sophisticated psychological conditioning: President Zelenskyy is a relentless personal target, while pro-Russian messaging is depersonalized, focused on abstract themes of stability and order. This is the operating system in action: a scalable, adaptable narrative infrastructure built not to win an argument, but to control the definition of what is “normal” in a war zone.

And the code of that system is simple: fracture, fragment, fatigue.

Whether it’s via a cloned version of Bild or a spoofed Ministry of Interior press release, the goal is not to win an argument; it’s to make argument itself feel futile.

This is the genius of what Pamment and Tsurtsumia describe: cognitive warfare that doesn’t need to convince you of Russia’s virtue. It only needs to convince you that virtue is a scam.

Hijacking our Youth

The deeper horror, however, is that this model is metastasizing, not just as a tactic but as a feature of modern politics. In Germany, for instance, Russian FIMI campaigns have actively worked to bolster the far-right AfD party, with leaked SDA documents revealing an explicit goal to increase their vote share. These campaigns thrive by hijacking and amplifying existing societal fissures around migration, climate policy, and institutional trust, demonstrating that the true target is not just an election, but the social contract itself.

And this is not a uniquely Russian playbook. The Czech intelligence service's 2024 report highlights similar influence strategies from China, alongside a deeply concerning trend of youth radicalization fueled by the very algorithms of our social networks. These platforms, with their profit-driven logic of engagement, inadvertently become the perfect delivery system for cognitive warfare, creating the fractured, polarized audiences that foreign actors are so adept at exploiting.

What then is to be done? The authors argue that the counter-FIMI community must shift from reactive to strategic. But this is not merely a call for better tech or faster takedowns. It is a call to recognize that we are no longer dealing with disinformation in the traditional sense. We are dealing with a market for epistemic entropy.

Truth, in this system, is not the goal. It is the casualty. The only way to fight back is not to “debunk” more efficiently but to restore a sense of epistemic cohesion: to reward not just content that spreads, but content that endures; to invest not only in surveillance of lies but in the curation of shared facts; to understand that attention is now a strategic resource, and like all resources, it must be governed.

The SDA is not just a threat. It is a provocation, a proof of concept for a world where the infrastructure of manipulation is fully normalized. It shows us what happens when perception becomes product, when democracy becomes a click-through rate, and when truth is outsourced to the highest bidder.

The answer is not just to ban the bots or block the ads. The answer is to reclaim the terms of reality.

Because in the end, the battlefield is not online.

It is the minds we inhabit, the identities we defend, the certainties we refuse to question. It is us, fragmented, distracted, and deeply susceptible to the illusion of control.

Stay curious

Colin
