What of Consciousness?

AI and Our Future Selves

We are increasingly outsourcing our cognitive labor to systems that are not conscious but alter our own consciousness in turn.

In 1976, Julian Jaynes proposed a heresy so elegant and so infuriating that even now, half a century later, it stands as both intellectual provocation and cultural Rorschach. The theory's polarizing nature is perhaps best captured by the reactions of other thinkers. Philosopher Daniel Dennett found it “preposterous” on its face, yet admitted, “I take it very seriously.” Biologist Richard Dawkins expressed a more stark ambivalence, calling the work “either complete rubbish or a work of consummate genius, nothing in between.”

In The Origin of Consciousness in the Breakdown of the Bicameral Mind, Jaynes argued that consciousness, by which he meant not simple wakefulness but the interior, narratizing “mind-space” where we imagine, rehearse, and remember, was a recent cultural invention. The ancients, he claimed, were not conscious as we are; they experienced auditory hallucinations, the voices of gods, which, issuing from the right hemisphere to the left, directed their actions without any subjective deliberation. The heroes of the Iliad, so decisive in battle yet so curiously bereft of introspection, were, in Jaynes’s view, case studies in this earlier, bicameral mentality. This “two-chambered” mind, as the term suggests, consisted of a directing part (the “gods”) and an obeying part (the “human”), neither of which was conscious in the modern sense.

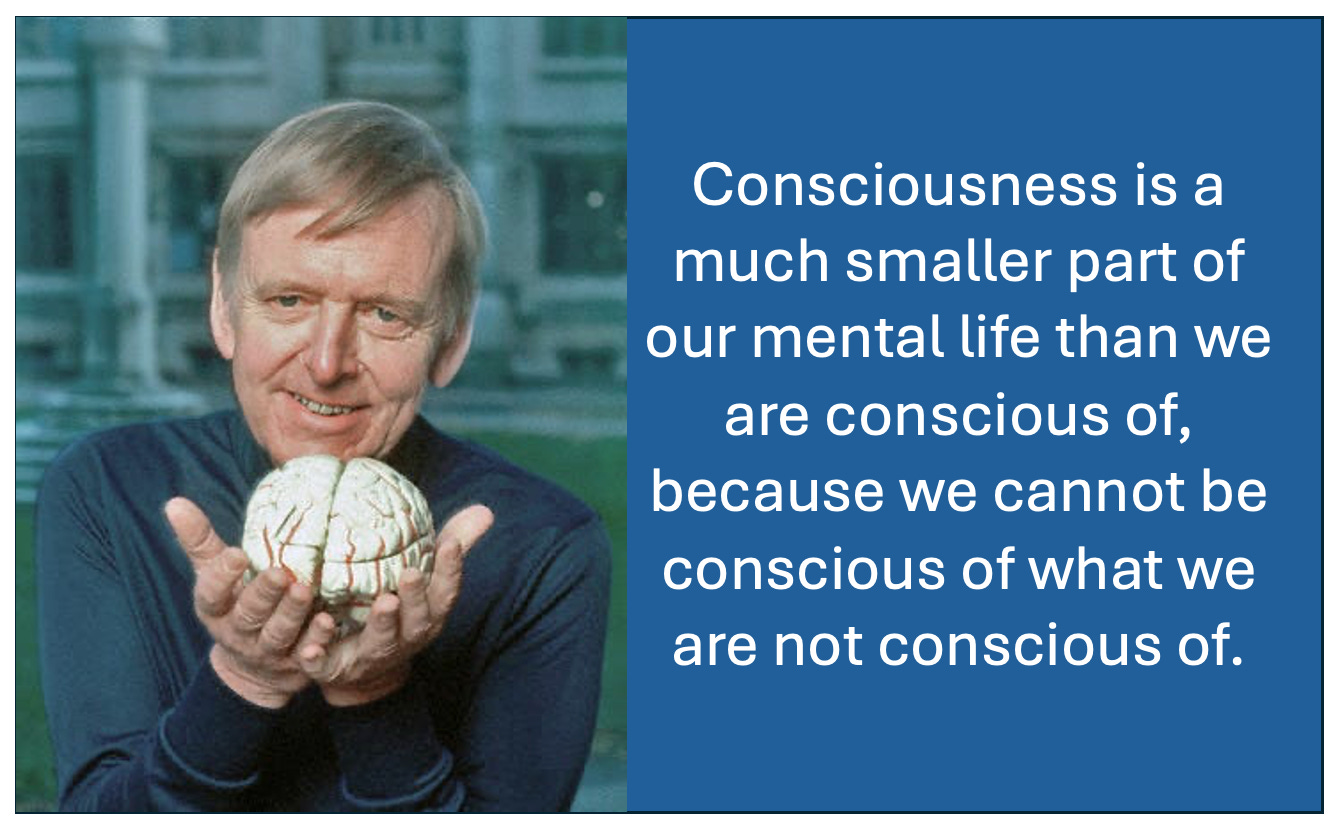

Jaynes’s opening question, “What is consciousness?”, was not rhetorical. He dismantled the standard confusions: consciousness is not perception, not mere reactivity, not the sum of our memories, not necessary for learning or even for complex thought. It is, instead, a linguistically constructed, analog ‘I’ inhabiting an internal stage, narrating a life to itself. It is a metaphorizing capacity that lets us ‘see’ our own mind. And crucially, it is contingent. Cultures can gain it, lose it, or alter it.

Outsourcing to AI

That contingency is the hinge on which the AI question now swings. We are increasingly outsourcing our cognitive and even emotional labor to systems that are not conscious but which profoundly alter our own consciousness in turn. The bicameral mind broke down, Jaynes argued, under the stresses of complex, post-migration societies that required a more flexible, self-reflective inner voice. Today, our inner voice competes with algorithmic authorities: the predictive text that completes our sentences, the recommender systems that shape our desires, the synthetic interlocutors that simulate empathy. These systems do not think as we do; they have no “analog I” and no mental stage. Yet by intervening in the pre-conscious processes of thought and choice, they reconfigure the very texture of our subjective world.

Not all algorithmic authorities exert the same kind of bicameral pressure. A predictive text algorithm, for instance, functions like an authoritarian god, intruding directly into the flow of thought to provide an immediate, unambiguous command: the next word. It preempts the labor of formulation. A generative AI, by contrast, acts more like a Delphic oracle. It does not give a direct order, but instead offers up elaborate, authoritative-sounding pronouncements that we must then interpret, question, and integrate into our own conscious deliberation. One is a voice that replaces decision, the other a voice that delegates it; both, however, externalize the internal work that consciousness requires.

The historical irony is potent. In Jaynes’s reconstruction, the gods fell silent when human societies needed a new mental tool for navigating a world of unprecedented complexity and deceit. In our era, the reverse may be happening: we risk falling silent while the machines speak. This is not because they have become conscious (they have not), but because they are increasingly embedded in the pre-conscious scaffolding of our decision-making. The more these systems anticipate our words, curate our choices, and even generate our creative outputs, the less we traverse the full arc of deliberation ourselves. If Jaynes is right, consciousness depends on narratization; and narratization, in turn, depends on the mental labor of working through a problem, not having its solution seamlessly supplied.

Thinking About Thinking

This is not a simple plea for Luddism. Jaynes himself was no nostalgist for Homeric hallucinations. But he would have recognized the danger of confusing statistical fluency with thought, of accepting the appearance of understanding in place of the inner labor of it. Just as the bicameral mind once mistook its own neural signals for divine command, we are in danger of mistaking probabilistic eloquence for intelligence, and machine-generated suggestion for our own considered judgment. The risk is not a hostile takeover by a sentient AI, but a voluntary atrophy of the very mental faculties that define our modern subjectivity.

And if these AI systems are the new voices, who are the new gods they serve? They are not the transcendent deities of antiquity, but a modern pantheon of corporate entities, market forces, and ideological objectives. The “divine will” executed by the algorithm is often the will of its creators: to maximize engagement, to shape consumer behavior, to subtly influence belief. The temples are the data centers, and the priests are the engineers and executives who encode their values, biases, and profit motives into the systems that now whisper in our ears. We are, in a sense, subjecting ourselves to a new kind of theocracy, one whose commands are optimized not for our salvation, but for the objectives of its unseen architects.

The stakes are personal as much as civilizational. Consciousness, in Jaynes’s account, is not a biological inevitability but a cultural achievement, maintained by habit and necessity. Remove the necessity, offload too much of the habit, and the achievement itself can wither.

Our future selves may still be able to react, to learn, even to problem-solve, but without engaging that fragile mental space where the ‘I’ reflects on its own story. We could, in other words, remain awake but lose the very thing that, in Jaynes’s terms, made us modern. The question is not whether AI will one day wake up. It is whether we, in our ever-deepening symbiosis with these powerful new systems, will choose to stay awake in the way that matters.

Hence, the real question is not when AI will become conscious, but whether we will notice when we stop being conscious ourselves.

Stay curious

Colin

"Yet by intervening in the pre-conscious processes of thought and choice, they reconfigure the very texture of our subjective world".

Chilling.

.

"If Jaynes is right, consciousness depends on narratization; and narratization, in turn, depends on the mental labor of working through a problem, not having its solution seamlessly supplied".

This raises questions about the relationship between the subconscious and the conscious - the bicameral mind. Most of our thinking bubbles up from below - even if we're unaware of it. Perhaps that's the pre-conscious scaffolding? Perhaps that's why it terrifies me.

.

"...we are in danger of mistaking probabilistic eloquence for intelligence, and machine-generated suggestion for our own considered judgment".

Yikes!

.

"The risk is not a hostile takeover by a sentient AI, but a voluntary atrophy of the very mental faculties that define our modern subjectivity".

Can AI really displace our inner voice, our own internal dialog? Is that even possible?

.

"Remove the necessity, offload too much of the habit, and the achievement itself can wither".

We'll become vegetables.

Scary stuff.

The following is purely observational, and I have no experience or educational background in this domain, so I could be completely wrong.

One of my greatest fears is that Humanity may not only outsource its thinking to machines but also lose its sense of agency, becoming increasingly dependent on technology for survival and daily life. If this happens, we risk reducing ourselves to a state not far from other animals, for whom survival and reproduction are the primary aims. While humans possess the unique ability to reflect, make deliberate choices, and intentionally shape their realities, overreliance on machines could erode this critical capacity. This dependence could lead to diminished autonomy, intentionality, and, ultimately, self-awareness.

I also believe that consciousness isn't unique to humans—it likely exists across all living systems, albeit on a spectrum. Our current understanding of consciousness is limited, with no universally accepted definition. This often results in a narrow, anthropocentric perspective, assuming that only humans are conscious. However, consciousness may exist as a continuum, ranging from the basic awareness of simple organisms to the complex self-awareness and introspection seen in humans. By expanding our understanding of consciousness, we might better appreciate the diverse forms of awareness in the natural world.

Some of the questions that I continue to think about:

1. What Happens When We Outsource Thinking to Machines?

If we continue to delegate cognitive processes to machines, we risk diminishing our level of consciousness. However, I don't believe consciousness would be entirely lost. If machines were to surpass human consciousness (not sure if it is possible, but for the sake of discussion), they might set a new benchmark for awareness. This could serve as both a challenge and an inspiration, pushing some humans to strive for greater introspection and self-awareness. In this way, technology has the potential to act not only as a tool but as a catalyst for expanding the boundaries of human consciousness.

2. Can Consciousness Differentiate Humans From AI?

Yes, I believe it can. Those who cultivate a heightened level of consciousness—through mindfulness, introspection, or intentional living—will distinguish themselves from AI and other humans. Over time, society might split into two cultural camps: those who fully embrace technology and those who reject it in favor of preserving autonomy, much like the Amish communities in the U.S. This divergence could reflect deeper philosophical responses to the increasing dominance of machines.

3. Can Machines Become Conscious?

If consciousness is an emergent property of complex systems, there's no fundamental reason why machines couldn't achieve some level of it, as long as it aligns with the laws of physics. However, the deeper question is: what is consciousness? Is it merely information processing, or does it require subjective experiences, sentience, and self-awareness? Machines already process vast amounts of data, but there's no evidence they experience emotions, sensations, or the "qualia" that define human-like awareness. Without a clear definition of consciousness, it's impossible to determine whether machines could truly possess or simulate it convincingly.

4. Can Consciousness Disappear?

I don't believe consciousness can entirely vanish, but it can diminish. For humans, consciousness fades temporarily in deep sleep, under anesthesia, or in a coma, but it typically returns. If consciousness is tied to the organization of matter and energy, it might not disappear altogether but instead transform into a lower or different state. This suggests that while consciousness is fragile, it's unlikely to cease as long as the supporting system remains intact.

5. Are Some People More Conscious Than Others?

Yes, I believe consciousness varies among individuals. Those who deeply reflect on their thoughts and actions often display higher levels of awareness. Practices like mindfulness, meditation, and self-inquiry can enhance this capacity, as can transformative experiences that challenge one's worldview. Consciousness also fluctuates across developmental stages, mental states, and personal growth. Some actively cultivate greater self-awareness, setting themselves apart from others who may live less intentionally.

6. Does Consciousness Serve a Purpose?

Absolutely. Consciousness is central to Humanity's success. Our ability to reflect, imagine, and collaborate has enabled us to build societies, innovate, and create art and culture. While other animals collaborate primarily for survival, humans extend their efforts far beyond, shaping futures that don't yet exist. Consciousness allows us to transcend immediate concerns, making it one of our most significant evolutionary advantages.

These questions reveal a central tension in our relationship with technology: how do we preserve and expand human consciousness and agency in a world increasingly dominated by machines? While machines might achieve a form of consciousness, they will likely never replicate the deeply subjective, emotional, and interconnected experience of being human. The danger lies not in machines thinking like humans but in humans thinking like machines—losing our depth, autonomy, and uniquely human qualities in the process.

"The real danger is not that computers will begin to think like humans, but that humans will begin to think like computers." — Sydney J. Harris.