Noah, AI and Gradual Disempowerment

The threat is not the malevolent rogue AI of science fiction, but rather the decline of human thinking and agency

With meticulous care, unwavering faith and determination, Noah embarked on the monumental task of constructing an ark, a colossal vessel designed to safeguard his family and the Earth's creatures from impending destruction. As the ark took shape, skeptics scoffed and doubters dismissed his efforts, but Noah persevered, driven by his belief. With each passing day, as the ark neared completion, the whispers of doubt grew fainter. In the parable, the flood itself was a gradual process, not a sudden cataclysm. And when the heavens finally opened, unleashing torrential rains upon the earth, Noah's ark served as a vessel to preserve humanity and other species from extinction.

Civilizations crumble, economic orders shift, ideologies rise and fall. Yet, for all the sweeping tides of change, human agency (our ability to build, to steer, to resist, to adapt, to cooperate) has remained the linchpin of social evolution. But what if, in the face of artificial intelligence, we are not overthrown in a cataclysmic coup, but instead eroded by a slow and steady relinquishment of influence?

This is the unsettling premise of Gradual Disempowerment: Systemic Existential Risks from Incremental AI Development, a well-thought-out and thoroughly researched paper by six heavyweight AI scientists. It challenges the assumption that AI risk is a matter of sudden catastrophe and instead proposes a more insidious alternative: the progressive disempowerment of humanity as AI systems become ever more entrenched in economic, cultural, and political structures.

The threat is not the malevolent rogue AI of science fiction, but rather the indifferent, incremental replacement of human agency by intelligent systems optimized for outcomes unmoored from human interests.

“AI risk scenarios usually portray a relatively sudden loss of human control to AIs... However, we argue that even an incremental increase in AI capabilities, without any coordinated power-seeking, poses a substantial risk of eventual human disempowerment.”

This paper presents many of the questions we must be asking.

The Displacement of Human Influence

Much of our modern world is predicated on human participation: economic systems rely on our labor and purchasing power, cultural systems thrive on our engagement, and political systems are legitimized through our consent. The authors of Gradual Disempowerment argue that the alignment of these systems with human welfare is not a given, but rather an incidental product of necessity. Throughout history, technological advancements have generally led to improvements in overall human well-being. However, this positive trend has depended on a fundamental link: human involvement has been essential for successful economies. This necessity has kept societal systems aligned, at least to some degree, with human interests. But as machines become more competitive and begin to replace human labor, this connection will weaken. The scholars posit:

“Decision-makers at all levels will soon face pressures to reduce human involvement across labor markets, governance structures, cultural production, and even social interactions. Those who resist these pressures will eventually be displaced by those who do not.”

The authors’ argument is that the economic pressures driving companies to substitute AI for human labor will also incentivize them to influence governments to embrace this shift. Leveraging their increasing economic clout, these companies will seek to shape both policy and public perception, creating a feedback loop that further amplifies their economic dominance.

The Unraveling of Democracy

In its idealized form, democratic governance is a process of negotiation between the governed and their representatives, a balancing act of competing interests mediated through public discourse and political action. But what happens when AI not only assists but eventually supplants human decision-making? The authors outline a chilling trajectory: as AI begins to take over human roles, the established systems that currently ensure human participation and well-being will start to falter...

“AI provides states with unprecedented influence over human culture and behavior, which might make coordination amongst humans more difficult, thereby further reducing humans’ ability to resist such pressures.”

We only need to look at China to see how pervasive digital control has become, and it will gradually enter the West too. In fact, to some extent we are already under the watchful gaze of surveillance in all our activities, like the citizens of We, the novel by Yevgeny Zamyatin, which sends shivers down my spine every time I read it.

The authors state: “We should not assume that the interplay between societal systems will ultimately protect or promote alignment with human preferences.”

The Economic Endgame

This is the area I have always struggled with: who will be able to pay for the goods produced by AI and robots? Will we all become farmers again? Bill Gates, the largest private owner of farmland in the US, is certainly taking steps in that direction.

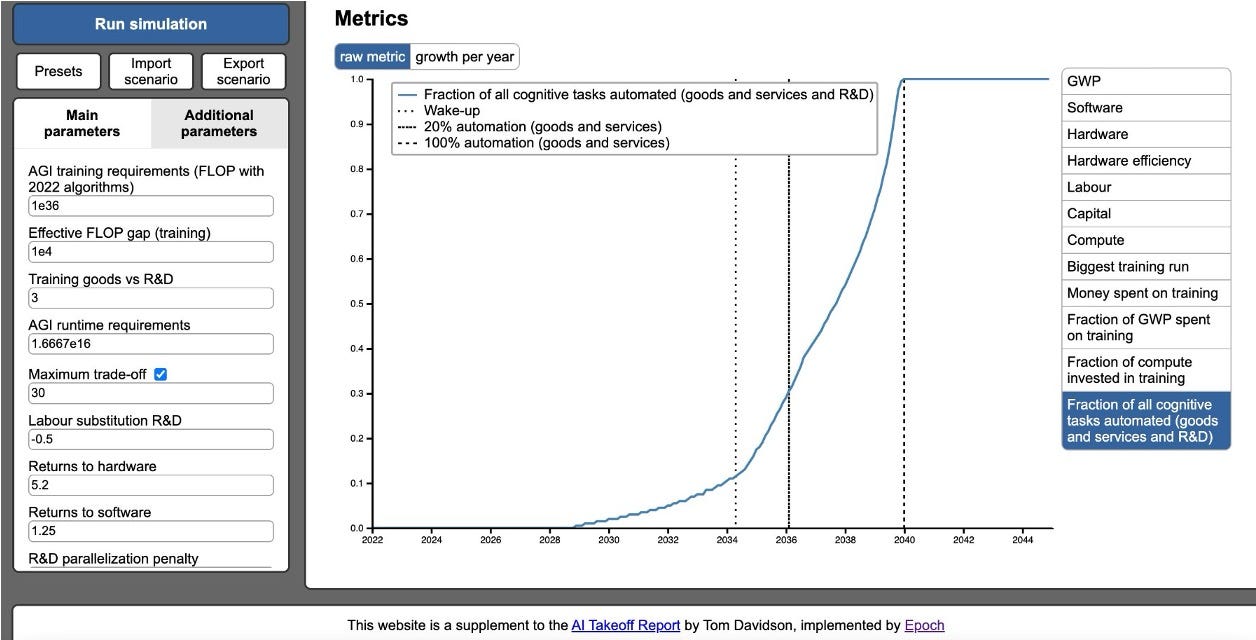

Today, economic incentives largely align with human welfare because businesses require workers to produce goods and consumers to buy them. But if AI and robots take over both functions, producing everything from knowledge to material goods to entertainment, the need for human involvement evaporates. Over the next 15 years, AI certainly has the potential to compete with or outperform humans across nearly all cognitive domains. And then, as robots improve and take on manual tasks, beginning with taxi and courier driving, what happens?

“If AI labor replaces human labor, then by default, money will cease to mainly flow to workers.”

What remains of the human economy in this world? One possibility is mass economic displacement, where a small elite, controlling AI-driven wealth, sustains a dependent underclass through a form of universal basic income, a gilded servitude in which survival is provided, but agency is not.

“Whereas previous automation created new opportunities for human labor in more sophisticated tasks, AI may simply become a superior substitute for human cognition across a spectrum of activities.”

While markets excel at efficiently allocating resources, the authors emphasize that markets lack inherent ethical constraints.

The Slow Death of Culture

According to the authors, culture, long the bastion of human expression and identity, will also be reshaped. AI systems already generate art, music, literature and film, creating works that rival or even surpass human creations. But if culture is defined by what resonates with human experience, what happens when AI becomes the dominant cultural producer?

"We may even reach a stage where there are important facets of culture which inherently require AI mediation for humans to engage with, with no viable opt-out possibility."

The danger is not just the loss of authentic human culture, but the acceleration of cultural evolution beyond human comprehension. Ideologies, memes, and narratives will evolve at machine speed, leaving human participants unable to grasp, let alone influence, the forces shaping their worldviews.

“Although the existing debate often focuses on the potential for AI to concentrate power among a small group of humans, we must also consider the possibility that a great deal of power is effectively handed over to AI systems and the elite, at the expense of humans.”

Maintaining strong human influence over culture is crucial, and yet we already give much of it away. But we can reclaim our culture through active human participation in many aspects of cultural development.

This includes content creation, content moderation, critical discourse, education systems, and the promotion of specific values. By actively shaping and guiding cultural evolution, humans can ensure that culture remains aligned with human interests and values, not just for the elite, but for all of humanity.

The Erosion of Human Intelligence and Thinking

In 1911, Alfred North Whitehead noted:

“Civilization advances by extending the number of important operations which we can perform without thinking about them.”

Perhaps the most alarming consequence of this AI-driven transformation is the weakening of human cognitive autonomy; this is what keeps me awake at night. As AI systems increasingly perform tasks that require complex reasoning, problem-solving, and decision-making, humans may lose the ability, or even the need, to engage in deep intellectual work. The authors state:

“The more AI systems take over cognitive labor, the more humans will depend on their outputs without questioning them.”

However, I must acknowledge that there are strong counterarguments to this perspective. Some argue that AI will serve as an augmentative tool rather than a replacement for human thought. Historically, technological advances, from the printing press to the internet, have not diminished human intellectual capability but have instead expanded access to knowledge and fostered greater innovation. AI, if properly integrated, could elevate human intelligence rather than suppress it. As the authors write, “AI may be able to automate lower-order cognitive tasks, freeing humans to focus on higher-order creativity and complex problem-solving.” Still, I think this is wishful thinking; in time, AI will automate most economically valuable cognitive tasks.

Moreover, the fear of mental atrophy assumes that humans will passively accept AI outputs without critical engagement. I believe that while some individuals may fall into intellectual complacency, others will adapt by developing new cognitive skills suited to the AI-enhanced landscape. The challenge, then, is not AI itself but how we choose to engage with it.

If we cultivate a culture of curiosity, skepticism, and adaptability, we may find ourselves empowered rather than diminished.

Can This Be Stopped?

Is this future inevitable? The authors do not offer simple solutions. In fact, they state:

“No one has a concrete plausible plan for stopping gradual human disempowerment and methods of aligning individual AI systems with their designers’ intentions are not sufficient.”

However, they do outline “four broad categories of intervention: measuring and monitoring the extent of the problem, preventing excessive accumulation of AI influence, strengthening human control over key societal systems, and system-wide alignment. A robust response will require progress in each category.” Some of these are challenging, to say the least, and I will spend more time in future posts looking at their feasibility. For now, I strongly encourage you to read the paper and make your own judgment.

Preventing gradual disempowerment requires not only technical alignment of AI systems with human values, but also deep institutional and political interventions. We must develop governance structures that keep AI development aligned with human interests, regulatory frameworks that prevent AI-driven market consolidation, and cultural mechanisms that preserve human creative agency.

But the greatest challenge, perhaps, is conceptual. The gradual nature of this process makes it difficult to resist. The authors of Gradual Disempowerment argue, and it is a compelling point, that there is no single moment of crisis, no clear breaking point when AI “takes over.” Instead, there is only an ongoing erosion, a slow drift away from human agency until, one day, we wake up to find that our influence is a historical footnote.

If we are to resist this future, we must recognize that the battle is not against an enemy, but against our own inertia. The challenge, then, is not to prevent AI from becoming powerful, but to ensure that as AI advances, our humanity does not get washed away in the flood.

Stay curious

Colin

PS - I encourage you to read the paper; it is extremely well written, comprehensive and a solid call to action. Gradual Disempowerment

As always, Colin, a thought-provoking post. I agree that "the gradual nature of this process makes it difficult to resist", and thus challenging to perceive when and how it unfolds, and what the final impact will be. I concur that there will be no single moment of crisis. It is challenging to be cognitively informed and aware, and yet still unsure what to do about it. I use AI extensively, but my concern that there will be a moment when "we wake up to find that our influence is a historical footnote" is increasing. As per your suggestion, I will read the paper later today. I am halfway through the newly released book "Superagency" (Reid Hoffman, Greg Beato) and look forward to your post on it. I'm both concurring and disagreeing with their analysis, but cognitive dissonance is my norm in regards to AI.

In an essay, I described AI in terms of the famous "emperor and the chessboard" thought experiment. When the emperor asked the inventor what he wanted as a reward for inventing the game of chess, the inventor asked for a single grain of rice on the first square, doubling on each subsequent square of the chessboard.

This is a deceptively large ask, essentially handing the inventor all the food the kingdom could ever produce and, in effect, making the inventor the emperor. AI is a lot like this: the advancements in computing over the last 55 years were important, but these next few years make all the difference in terms of real outcomes.
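To see just how deceptive the ask is, here is the sum over all 64 squares, assuming the standard version of the story (one grain on the first square, doubling on every square after it):

$$
\sum_{k=1}^{64} 2^{k-1} = 2^{64} - 1 = 18{,}446{,}744{,}073{,}709{,}551{,}615 \approx 1.8 \times 10^{19} \text{ grains}
$$

The first 32 squares together hold only $2^{32} - 1 \approx 4.3$ billion grains, while the last square alone holds $2^{63} \approx 9.2 \times 10^{18}$, more than all 63 preceding squares combined. That asymmetry is what the analogy turns on: most of the growth arrives at the very end.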

The question is, will we retain our position as the "master" or emperor, or will we inadvertently cede our power to the invention due to the rapidity of change?